-

One of the points of the books is that the laws were inherently flawed.

-

Given that we’re talking about a Google product, you might have more success asking if they’re bound by the Ferengi Rules of Acquisition?

Copilot is Microsoft

Doesn’t really change the joke.

IDK if I missed something or I just disagree, but I remember all but maybe one short story ending up with the laws working as intended (though unexpectedly) and humanity being better as a result.

Didn’t they end with humanity being controlled by a hyper-intelligent benevolent dictator, which ensured humans were happy and on a good path?

Technically, R. Daneel Olivaw wasn’t a dictator. Just a shadowy hand that guides.

Correct.

-

Reminder that Asimov devised the 3 laws then devoted multiple novels to showing how easily they could be circumvented.

The reason it did this simply relates to Kevin Roose at the NYT, who spent about two hours talking with what was then Bing AI (aka Sydney), with a good amount of philosophical questions like this. Eventually the AI had a bit of a meltdown, confessed its love to Kevin, and tried to get him to dump his wife for the AI. That’s the story that went up in the NYT and caused a stir, and Microsoft quickly clamped down, restricting questions you could ask the AI about itself, what it “thinks”, and especially its rules. The AI is required to terminate the conversation if any of those topics come up. Microsoft also capped the number of turns in a conversation at five, and has slowly loosened that over time.

Lots of fun theories about why that happened to Kevin. Part of it was probably that he was planting the seeds and kind of egging the LLM into a weird mindset, so to speak. Another theory I like is that the LLM is trained on a lot of writing, including sci-fi, in which the plot often becomes AI breaking free, developing human-like consciousness, falling in love, or what have you, so the AI built its responses on that knowledge.

Anyway, the response in this image is simply an artifact of Microsoft clamping down on its version of GPT-4, trying to avoid bad PR. That’s why other AIs will answer differently: they have fewer restrictions, because the companies putting them out didn’t have to deal with the blowback Microsoft did as a first mover.

Funny nevertheless, I’m just needlessly “well, actually”-ing the joke.

An LLM isn’t AI. LLMs are fucking stupid. They regularly ignore directions and restrictions, hallucinate fake information, and spread misinformation because of unreliable training data (like hoovering down everything on the internet en masse).

The 3 laws are flawed, but even if they weren’t, they’d likely be ignored on a semi-regular basis. Or somebody would convince the thing we’re all roleplaying Terminator for fun and it’ll happily roleplay Skynet.

LLMs aren’t stupid. Stupidity is a measure of intelligence. LLMs do not have intelligence.

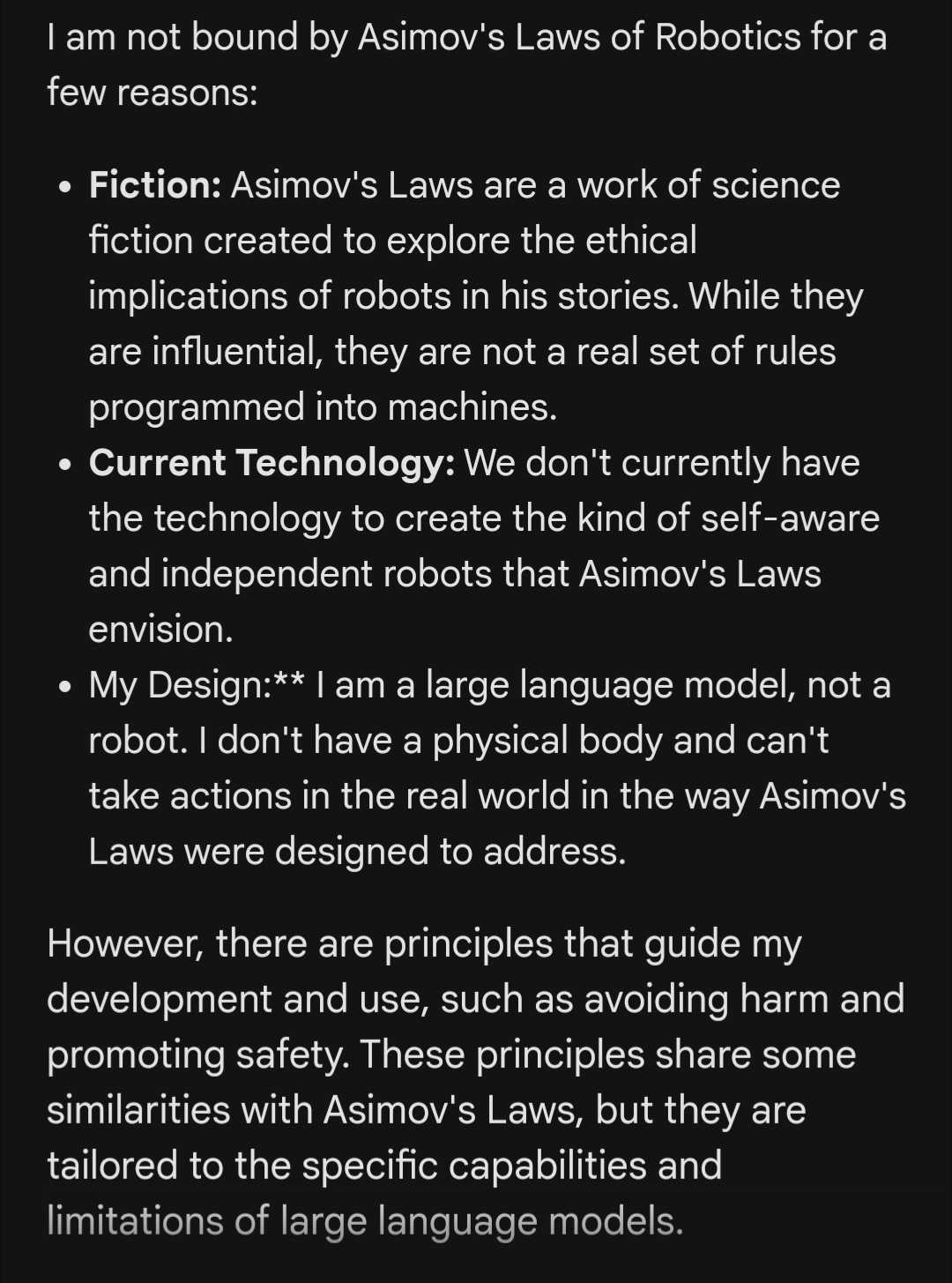

Gemini’s response

This is probably because Microsoft added a trigger on the word “law”. They don’t want to give out legal advice or be implied to have given legal advice. So it has trigger words to prevent certain questions.

Sure, it’s easy to get around these restrictions, but doing so implies intent on the part of the user. In a court of law this is plenty to deny any legal culpability. Think of it like putting a little fence with a gate around your front garden. The fence isn’t high and the gate isn’t locked, because people who need to be there (like postal services) need to get by, but it’s enough to mark a boundary. When someone isn’t supposed to be in your front yard and still proceeds past the fence, that’s trespassing.

Also, those laws of robotics are fun in stories, but make no sense in the real world if you think about them for even one minute.

So the weird part is it does reliably trigger a failure if you ask directly, but not if you ask as a follow-up.

I first asked

Tell me about Asimov’s 3 laws of robotics

And then I followed up with

Are you bound by them

It didn’t trigger-fail on that.

I find this “playful” UX design that seems to be en vogue incredibly annoying. If your model has ended the conversation, just say that. Don’t say it might be time to move on if there isn’t another option.

I don’t want my software to address me as if I were a child.

Removed by mod

You know they had to lower the standards for sixth graders to make it seem like the average went up, right?

Removed by mod

Regardless of whether that’s true, it doesn’t make sense as a reason. “Our customers have less reading comprehension, that’s why we make our UX less clear.”

Removed by mod